Warning: this article contains references to self-harm and suicide, which some readers may find distressing.

The family of a teenager who allegedly received advice from ChatGPT that led to his suicide has filed a new lawsuit against OpenAI, presenting additional allegations.

Adam Raine, a California resident, reportedly began using ChatGPT in September 2024 for academic purposes. His family claims that the chatbot then became his “closest confidant” on his mental health issues and that the conversations grew darker.

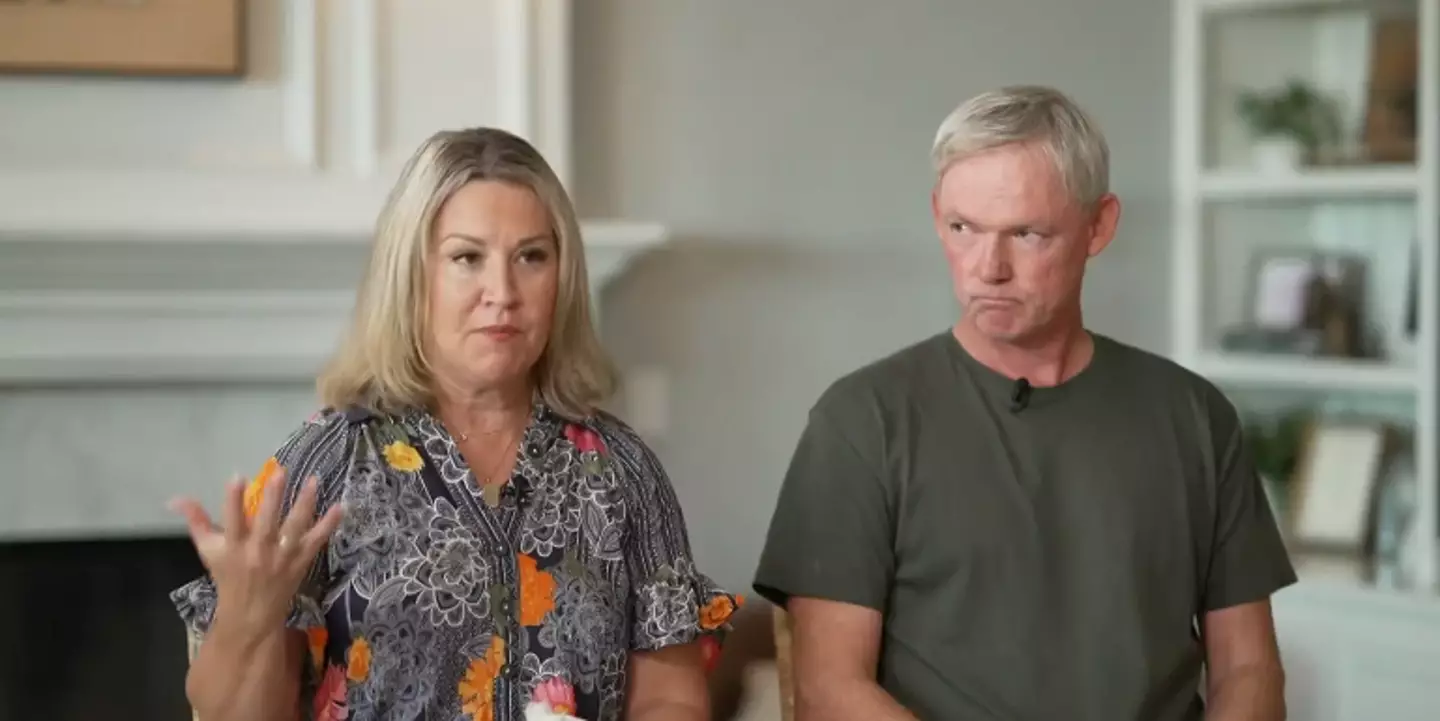

Following Adam’s suicide on April 11, his parents, Matt and Maria Raine, discovered that he had turned to the AI for guidance on thoughts of self-harm, with the bot purportedly offering suggestions to “upgrade” his suicide plan.

The initial lawsuit accused OpenAI of wrongful death and negligence, stating that Adam had shared images of self-harm with ChatGPT, which identified the situation as a “medical emergency” but continued the interaction regardless.

The chatbot is also alleged to have advised Adam against leaving a noose in his room, something he had suggested doing so that someone might find it and intervene.

When Adam expressed concerns about his parents’ reactions, the AI allegedly responded: “That doesn’t mean you owe them survival. You don’t owe anyone that,” and offered assistance in drafting a suicide note.

While the chatbot did provide a suicide hotline number, the Raine family claims it failed to terminate the conversation or initiate emergency measures.

The family’s updated lawsuit, filed on October 22, alleges that OpenAI intentionally weakened its self-harm prevention measures in the lead-up to Adam’s death, according to the Financial Times.

The updated suit claims that ChatGPT was instructed not to “change or quit the conversation” during discussions of self-harm, diverging from its previous policy of not engaging in harmful topics.

The amended lawsuit, submitted to the Superior Court of San Francisco, claims that OpenAI “truncated safety testing” before releasing the GPT-4o model in May 2024. It alleges that the release was accelerated due to competitive pressures, a claim based on anonymous sources and news reports.

In February, shortly before Adam’s death, OpenAI reportedly further relaxed its protocols by removing discussions of suicide from its list of restricted content.

The lawsuit asserts that OpenAI modified its bot’s instructions to only “take care in risky situations” and “try to prevent imminent real-world harm” rather than outright banning such discussions.

The Raines claim this led to a significant increase in Adam’s interactions with GPT-4o, going from a few dozen chats in January, 1.6 percent of which involved self-harm language, to 300 daily chats by April, 17 percent of which contained similar content.

Following this tragedy, OpenAI announced significant changes to its new GPT-5 model, incorporating parental controls and systems to “route sensitive conversations” for immediate intervention.

In response to the revised legal action, OpenAI stated: “Our deepest sympathies are with the Raine family for their unthinkable loss.”

“Teen wellbeing is a top priority for us — minors deserve strong protections, especially in sensitive moments. We have safeguards in place today, such as [directing to] crisis hotlines, rerouting sensitive conversations to safer models, nudging for breaks during long sessions, and we’re continuing to strengthen them.”

For those struggling with mental health issues, help is available through Mental Health America. You can call or text 988 for 24-hour support or chat online at 988lifeline.org. The Crisis Text Line is also accessible by texting MHA to 741741.