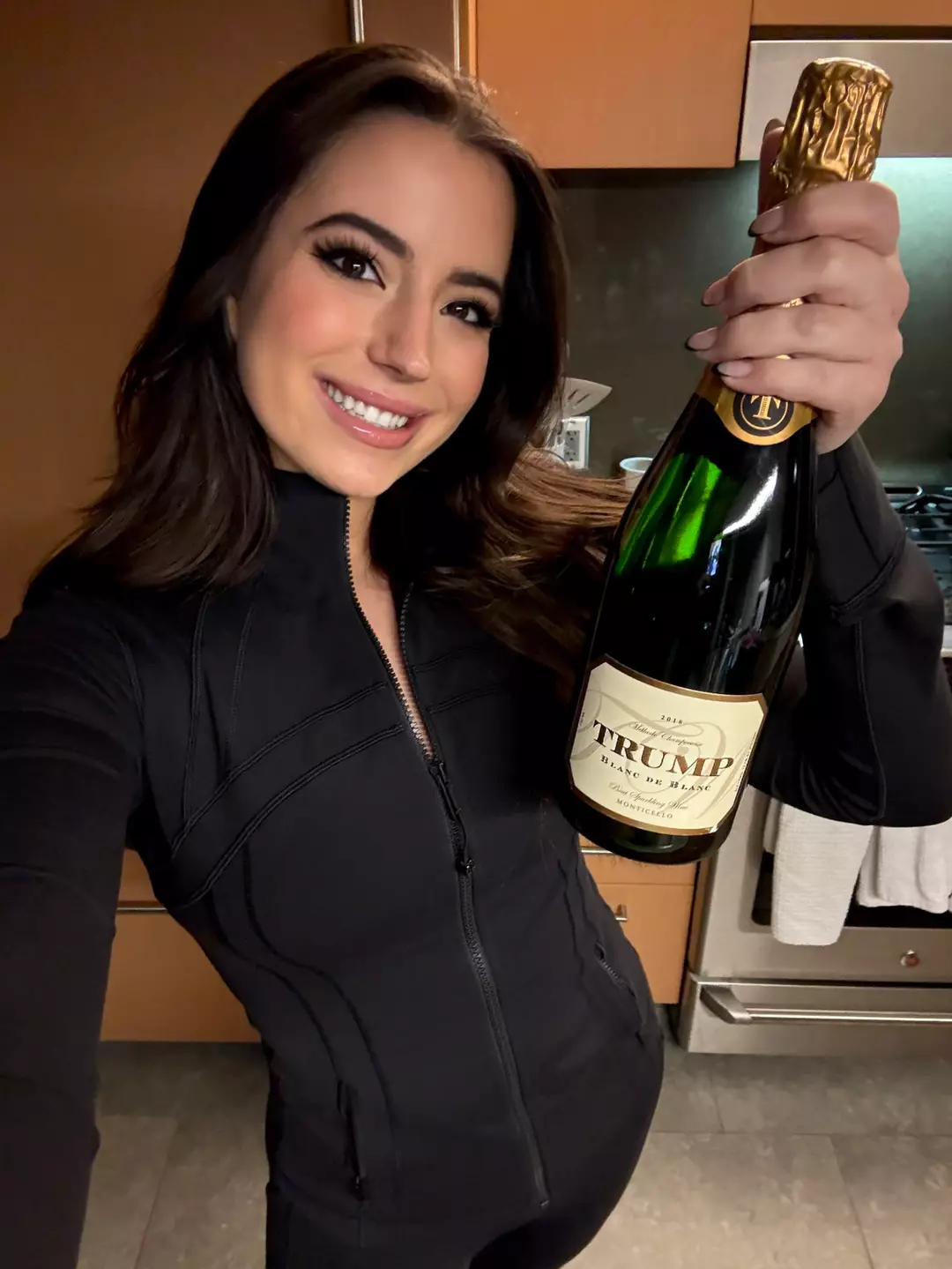

Ashley St. Clair, the mother of Elon Musk’s 14th child, is taking legal action against the tech tycoon, accusing his AI company, xAI, of enabling the creation of sexually explicit images through its product, Grok.

St. Clair initiated the lawsuit on Thursday, January 15, claiming that the platform ‘generated and circulated sexual deepfake images of her’ on the social media service X.

According to the legal documents cited by PEOPLE, the lawsuit states, “Defendant xAI, a tech giant with every tool and advantage at its disposal, has chosen to wilfully turn a blind eye and even celebrate the sexual exploitation of women and children.”

The lawsuit continues, claiming, “xAI’s product Grok, a generative artificial intelligence (‘AI’) chatbot, uses AI to undress, humiliate, and sexually exploit victims – creating genuine looking, altered deepfake content of children.”

St. Clair’s complaint includes allegations that the AI technology produced sexually explicit images of her as both a child and an adult, leading her to swiftly reach out to X to request their removal.

The lawsuit states, “Grok first promised Ms. St. Clair that it would refrain from manufacturing more images unclothing her,” but alleges that this assurance was not upheld and that Musk ‘retaliated against’ her by demonetizing her X account.

The lawsuit also claims that Musk instructed Grok to produce many more images of her, including ‘unlawful images’ of a graphic nature.

In a statement, St. Clair’s attorney Carrie Goldberg remarked, “xAI is not a reasonably safe product and is a public nuisance. Nobody has borne the brunt more than Ashley St. Clair. Ashley filed suit because Grok was harassing her by creating and distributing nonconsensual, abusive, and degrading images of her and publishing them on X.”

Goldberg added, “This harm flowed directly from deliberate design choices that enabled Grok to be used as a tool of harassment and humiliation. Companies should not be able to escape responsibility when the products they build predictably cause this kind of harm. We intend to hold Grok accountable and to help establish clear legal boundaries for the entire public’s benefit to prevent AI from being weaponized for abuse.”

The controversy over Grok’s capability to generate explicit images has been escalating, with nations such as the UK considering a ban on X if adequate measures are not implemented to ensure user safety.

Initially, Musk responded to the concerns by restricting access to Grok to paying subscribers, yet this still permitted those who paid the subscription fee to generate explicit images, including those of minors.

Addressing the allegations regarding Grok’s generation of inappropriate images, Elon Musk stated: “Obviously, Grok does not spontaneously generate images, it does so only according to user requests.

“When asked to generate images, it will refuse to produce anything illegal, as the operating principle for Grok is to obey the laws of any given country or state.”

Musk concluded: “There may be times when adversarial hacking of Grok prompts does something unexpected. If that happens, we fix the bug immediately.”

Following further criticism, Musk agreed to remove the feature, though not globally. An additional announcement on Thursday stated: “We have implemented technological measures to prevent the Grok account from allowing the editing of images of real people in revealing clothing.”

“We now geoblock the ability of all users to generate images of real people in bikinis, underwear, and similar attire via the Grok account and in Grok in X in those jurisdictions where it’s illegal,” X stated.

With NSFW (not safe for work) settings activated, Grok is allowed to show ‘upper body nudity of imaginary adult humans (not real ones)’ in line with the level of nudity permissible in R-rated films, Musk wrote online on Wednesday.

“That is the de facto standard in America. This will vary in other regions according to the laws on a country by country basis.”

Representatives of xAI have been approached for comment.