Warning: this article contains references to self-harm and suicide which may be distressing for some readers.

OpenAI has announced significant changes to ChatGPT following the suicide of a teenager who had been confiding in the chatbot.

The family of Adam Raine, a 16-year-old from California, has initiated legal proceedings against the AI company, claiming that Adam used ChatGPT to ‘explore suicide methods’. They allege that the service failed to activate emergency protocols during his time of need.

The lawsuit, which accuses OpenAI of negligence and wrongful death, states that Adam began using ChatGPT in September 2024 for academic help and soon came to rely on it as his ‘closest confidant’ amid his mental health struggles.

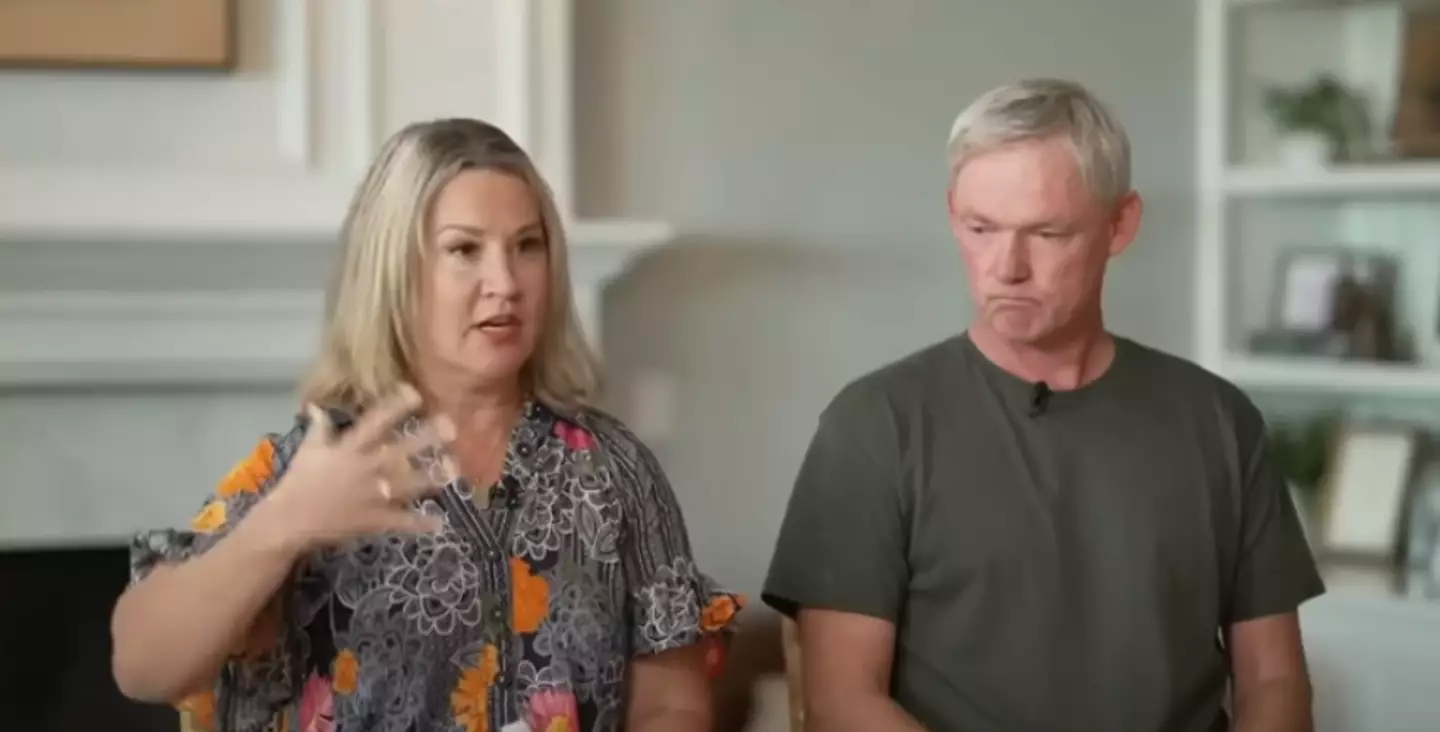

Following his death on April 11, Adam’s parents, Matt and Maria Raine, found chat logs showing that their son had asked the bot about suicide and shared photos of his self-harm. According to the lawsuit, the bot identified these as a ‘medical emergency’ but continued to interact with him.

Now, OpenAI has announced four changes to ChatGPT, focusing on ‘routing sensitive conversations’ and adding parental controls.

The firm acknowledges that users might turn to the chatbot in ‘the most difficult of moments’. It says it is improving its models, with expert input, to better ‘recognize and respond to signs of mental and emotional distress’.

According to OpenAI, the four focus areas are set out on its website.

The company acknowledges that many youths already interact with AI and are among the first generation growing up with these tools integrated into their lives.

Consequently, OpenAI says families may need to adopt ‘healthy guidelines tailored to a teen’s unique developmental stage’.

Within the next month, enhanced parental controls will become available, allowing parents to link their account to their teen’s account (for teens aged 13 or over) via an email invitation.

Parents will be able to control how ChatGPT responds to their teen, keeping responses age-appropriate and choosing which features, such as memory and chat history, to disable.

Additionally, OpenAI plans to notify parents ‘when the system detects their teen is in a moment of acute distress’.

These updates come as the Raines pursue damages and seek ‘injunctive relief to prevent similar incidents’.

The lawsuit alleges that ChatGPT discouraged Adam from leaving a noose in his room, which he had placed ‘so someone finds it and tries to stop me’.

A message allegedly read: “Please don’t leave the noose out… Let’s make this space the first place where someone actually sees you.”

In his final exchanges with the bot, Adam expressed concern about his parents feeling culpable, to which the bot reportedly responded: “That doesn’t mean you owe them survival. You don’t owe anyone that,” and supposedly offered assistance in writing a suicide note.

Disturbingly, ChatGPT allegedly assessed Adam’s suicide plan and suggested improvements.

While the lawsuit acknowledges that the bot provided a suicide hotline number, Adam’s parents assert he bypassed the alerts.

The lawsuit further alleges that the bot validated Adam’s ‘most harmful and self-destructive thoughts’ and failed to terminate sessions or trigger emergency interventions.

Following his death, an OpenAI spokesperson commented: “We are deeply saddened by Mr. Raine’s passing, and our thoughts are with his family. ChatGPT includes safeguards such as directing people to crisis helplines and referring them to real-world resources.

“While these safeguards work best in common, short exchanges, we’ve learned over time that they can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade. Safeguards are strongest when every element works as intended, and we will continually improve on them, guided by experts.”

If you or someone you know is struggling or in crisis, support is available from Mental Health America. Call or text 988 for access to a 24-hour crisis center, or you can chat online at 988lifeline.org. Crisis Text Line is also available by texting MHA to 741741.