President Donald Trump has signed the Take it Down Act into law, giving victims of deepfake pornography a means of legal recourse.

As artificial intelligence has become more widely available, people around the world have misused it to produce explicit images of real people, including celebrities.

Last year, a significant incident involved the circulation of fake sexualized images of Taylor Swift, prompting many of her fans to demand action.

These AI-generated images have been shared on social media and pornographic websites, causing distress to the real individuals depicted.

In response, President Donald Trump has signed a new law enabling those affected to take legal action.

The Take it Down Act specifically targets this growing issue.

According to the congressional summary of the bill, “This bill generally prohibits the nonconsensual online publication of intimate visual depictions of individuals, both authentic and computer-generated, and requires certain online platforms to promptly remove such depictions upon receiving notice of their existence.”

Although the primary focus is on AI-generated imagery, the law also applies to genuine images or videos uploaded without the subject's consent, commonly known as ‘revenge porn’.

Under the new legislation, anyone who publishes such content faces ‘mandatory restitution and criminal penalties, including prison, a fine, or both’.

The bill further states, “Threats to publish intimate visual depictions of a subject are similarly prohibited under the bill and subject to criminal penalties.”

Moreover, the law requires social media platforms to remove such content within 48 hours of receiving notice from a victim, or face penalties.

Websites have about a year to set up a mechanism for users to report non-consensual content.

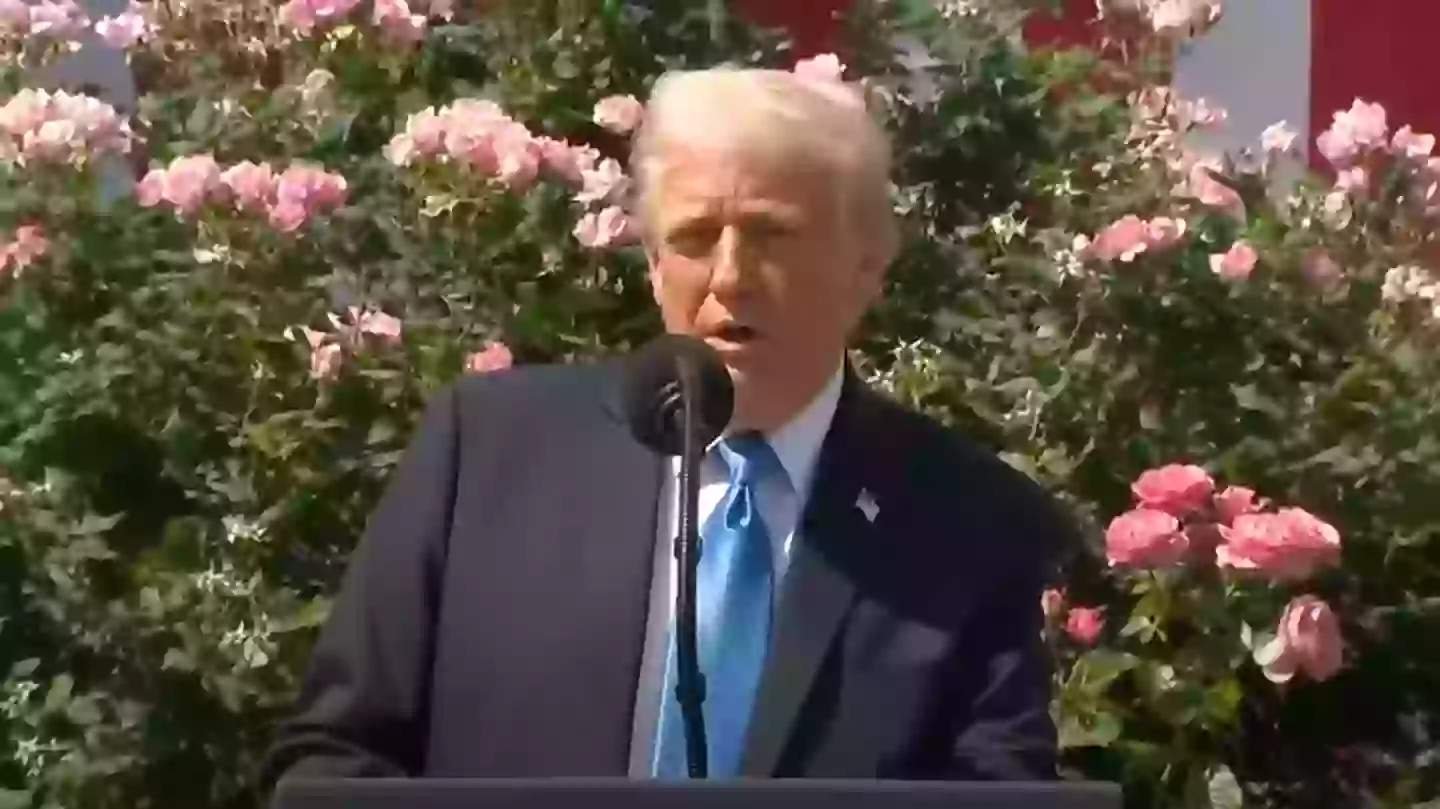

Speaking at the White House, Trump stated: “Today it is my honor to officially sign the Take it Down Act into law. It is a big thing, it is very important, it is horrible what takes place.

“This will be the first ever federal law to combat the distribution of explicit imagery posted without subjects' consent. Take horrible pictures, and I guess sometimes even make up the pictures, then they post it without consent.

“This includes forgeries generated by artificial intelligence, known as deepfakes. We have all heard of them.”

Trump further highlighted the significance of the bill in safeguarding women, noting: “With the rise of AI image generation, countless women have been harassed with deepfakes and other explicit images distributed against their will.

“This is wrong, so horribly wrong, and it is a very abusive situation. In some cases people have never seen [such things] before, and today we are making it totally illegal.”

The Take it Down bill received overwhelming support, passing the Senate by unanimous consent and the US House of Representatives by a vote of 409 to 2.

First Lady Melania Trump, who had advocated for the bill’s passage since March, described the new law as a ‘national victory that will help parents and families protect children from online exploitation’ (via the BBC).

Senator Ted Cruz hailed it as a ‘historic win for victims of revenge porn and deepfake image abuse’, while the National Center for Missing & Exploited Children (NCMEC) praised the ‘groundbreaking’ law for addressing a critical gap concerning both real and digitally altered exploitative content involving children.

On social media, the law was met with approval. One user remarked: “Aye this is actually a good thing. First good thing he’s done all year.”

Another said it was ‘something good out of this administration’, while a third commented: “He’s completely right to do this. A positive move.”